Highlights from ICML 2016

Thursday July 7, 2016

I was fortunate to attend the International Conference on Machine Learning in New York from June 19-24 this year. There were walls and walls of multi-arm bandit posters (etc.) but I mostly checked out neural net, deep learning things. Here are my highlights.

In general: People are doing so many interesting things! Wow. Too many things to try. Architectures are more complex and more flexibly experimented with, it seems. And everybody loves batch norm. I didn't see anything that seemed like a huge conceptual breakthrough that would change the direction of the field, but maybe I'm wishing for too much, maybe the field doesn't need such a thing, or maybe it was there and I missed it!

For my breakdown of the conference structure itself, see Conferences: What is the Deal?.

Flashiest paper award: Generative Adversarial Text to Image Synthesis is a system where you put in text and the computer makes a picture.

Good job, computer!

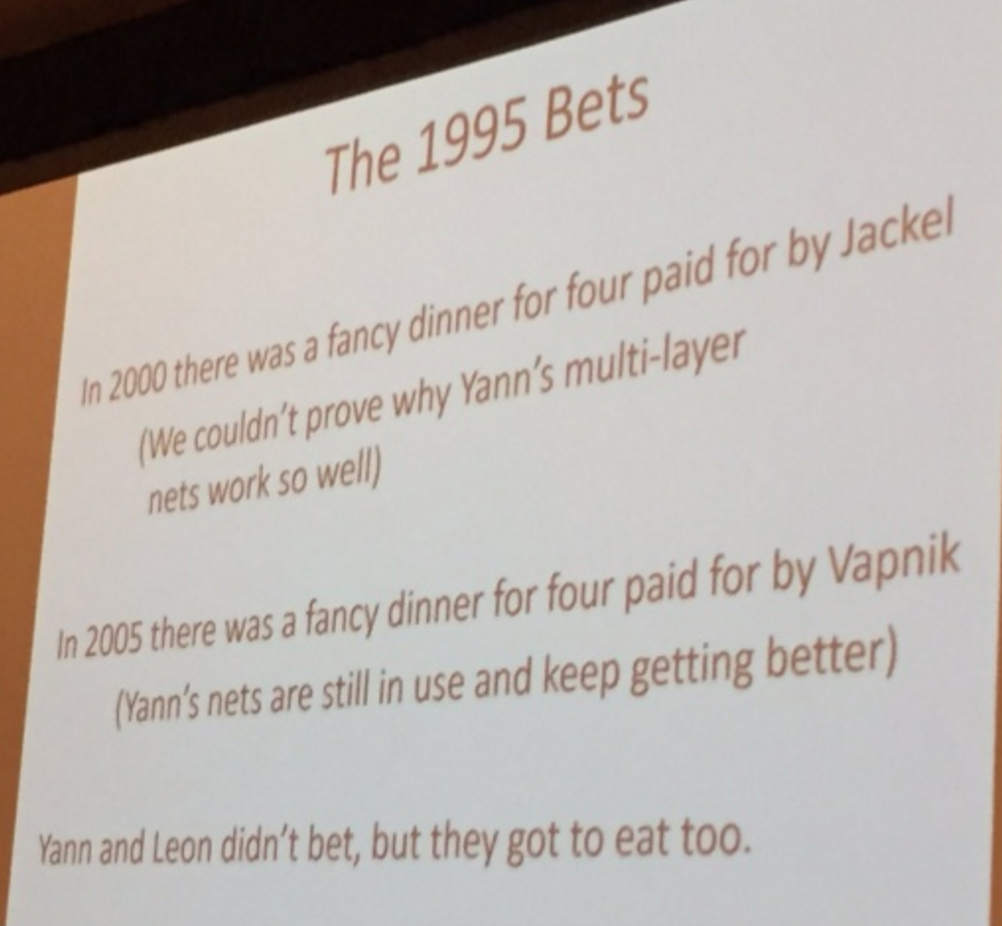

Best talk award: Machine Learning Applications at Bell Labs, Holmdel was Larry Jackel's history of neural nets, starting back in 1976. Great perspective and personal stories, presented as part of Neural Nets Back to the Future.

Yes, Jackel's bets involve the V of VC Dimension, the Le of LeNet, and SGD-master Bottou.

The first tutorial I heard was from Kaiming He on Deep Residual Networks. ResNets and their cousins (like highway networks) succeed essentially because wiggling a little tends to be better than going somewhere entirely different.

The intuition: It's hard to train deeper networks. But they ought to be better! Well, if we take a net and add another layer that encodes the identity, that's deeper, and exactly as good! What if all the layers were like the identity plus a little bit that's learned?

I was also impressed that, for example, a 152-layer ResNet can have fewer parameters than a 16-layer VGG net. Models aren't always getting more complex in terms of number of parameters!

Slightly more: If you're careful where you put nonlinearities, it's like you're always adding instead of multiplying! Stacking two convolutional layers before "reconnecting" works better in practice than doing one or three!

Boom: you can train a model with a thousand layers.

The second tutorial I heard was from Jason Weston on Memory Networks for Language Understanding. Interesting references:

- A Roadmap towards Machine Intelligence

- Teaching Machines to Read and Comprehend

- Towards AI-Complete Question Answering: A Set of Prerequisite Toy Tasks

- bAbI natural language tasks

It was neat seeing what can and can't be done currently for the "toy tasks." And Weston was stylish.

The third tutorial I heard was David Silver's on Deep Reinforcement Learning (with bonus deck on AlphaGo). Reinforcement learning is a big deal and getting bigger, it seems. I should really get into it more. Silver has course materials on his site. Also, OpenAI is into it, and they have a whole OpenAI Gym where you can play with things. Neat!

I liked the problem statement and solution of CReLUs. Too briefly: With ReLUs you tend to get paired positive/negative filters because one filter can't represent both. So build pairing into your system, and things work better. Very cogent content. Paper: Understanding and Improving Convolutional Neural Networks via Concatenated Rectified Linear Units

On sizing layers, an SVD approach based on statistical structure in the weights was interesting. Paper: A Deep Learning Approach to Unsupervised Ensemble Learning

Discrete Deep Feature Extraction: A Theory and New Architectures is a little math-heavy but close to interesting ideas about understanding and using the internals of neural networks.

Autoencoding beyond pixels using a learned similarity metric does neat things using the internals of a network for more network work - it might be metaphorically "reflective" - and comes up with some flashy visual results, too.

Semi-Supervised Learning with Generative Adversarial Networks shows that a fairly natural idea for GANs does in fact work: you can use labels like "real_a, real_b, real_c, fake" instead of just "real, fake".

We show that this method can be used to create a more data-efficient classifier and that it allows for generating higher quality samples than a regular GAN.

Fixed Point Quantization of Deep Convolutional Networks was pretty neat, if you're into that kind of thing.

I really liked the Neural Nets Back to the Future workshop.

I was so interested in the historical perspective from the experienced people presenting, for a while I was tweeting every slide.

From pictures I took (and tweeted) I reconstructed slides for "Machine Learning Applications at Bell Labs, Holmdel" from Larry Jackel, as mentioned above.

I also tweeted all through Gary Tesauro's talk, but thought it was of less general interest.

Patrice Simard's Backpropagation without Multiplication was fascinating, and much better for his commentary. For example:

Discretizing the gradient is potentially very dangerous. Convergence may no longer be guaranteed, learning may hecome prohibitively slow, and final performance after learning may be be too poor to be interesting,

Simard said that quote from the paper isn't really true; you can just use his method without worrying about that. This is a quote I took down as he was speaking:

Gradient descent is so robust to errors in the gradient, your code probably has bugs, but you don't need to fix them.

During a panel discussion, Yann LeCun said max pooling makes adversarial training unstable, and so it's better to use strided convolution if you want to downsample.

Later I noticed related sentiment in Karpathy's notes:

Getting rid of pooling. Many people dislike the pooling operation and think that we can get away without it. For example, Striving for Simplicity: The All Convolutional Net proposes to discard the pooling layer in favor of architecture that only consists of repeated CONV layers. To reduce the size of the representation they suggest using larger stride in CONV layer once in a while. Discarding pooling layers has also been found to be important in training good generative models, such as variational autoencoders (VAEs) or generative adversarial networks (GANs). It seems likely that future architectures will feature very few to no pooling layers.

Barak Pearlmutter gave a talk about automatic differentiation and a really fast system called vlad that I think runs on stalin. Pretty impressive speed! Following up later he did add:

keep in mind that if all your computations are actually array operations on the GPU, which takes all the time, then the overhead of the AD system might not really matter since it's just scheduling the appropriate derivative-computing array operations on the GPU.

The next day Ryan Adams gave a talk on the same topic at the AutoML workshop featured a Python autograd package that seemed to have some of the features (closure, for example) as vlad.

Everybody loves automatic differentiation!

I was briefly at the Workshop on On-Device Intelligence but ended up wandering.

I visited the Data-Efficient Machine Learning workshop and saw Gelman's amusing talk called Toward Routine Use of Informative Priors.

I spent some time at the Machine Learning Systems workshop.

Unfortunately I missed Yangqing Jia's Towards a better DL framework: Lessons from Brewing Caffe.

I did see Design Patterns for Real-World Machine Learning Systems, at which I was impressed at the number of named machine learning design patterns at Netflix.

I closed out the conference at the AutoML workshop. Of possible interest in connection with this topic is a recent DARPA effort.